Legislative timeline

- April 2021 – Proposal of a first draft of the regulation by the European Commission.

- December 2023 – Political agreement between the European Parliament (EP) and the Member States (through the Council) on the AI Act.

- March 2024 – Adoption of the AI Act by the EP by a large majority.

- May 2024 – Endorsement of the regulation by the Council.

Next steps:

- Publication in the EU’s Official Journal in the coming days.

- The AI Act will enter into force twenty days after its publication in the Official Journal and will be fully applicable 24 months after its entry into force, except for:

  - Bans on prohibited practices (6 months after entry into force);

  - Codes of practice (9 months after entry into force);

  - General-purpose AI rules, including governance (12 months after entry into force);

  - Obligations for high-risk systems that are products, or safety components of products, covered by the EU harmonisation legislation listed in Annex I to the Act (36 months after entry into force). Other high-risk systems follow the general 24-month rule.
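The month arithmetic behind these milestones can be sketched in a few lines of Python. This is an illustration only: the `add_months` and `application_timeline` helpers are hypothetical, the publication date passed in is an assumption, and the final text of the regulation fixes exact calendar dates that should be relied on rather than this arithmetic.

```python
import calendar
from datetime import date, timedelta


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the last day of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


# Offsets in months after entry into force, as listed in the timeline above.
MILESTONES = {
    "Bans on prohibited practices": 6,
    "Codes of practice": 9,
    "General-purpose AI rules including governance": 12,
    "Full applicability (general rule)": 24,
    "Obligations for high-risk systems": 36,
}


def application_timeline(publication: date) -> dict[str, date]:
    """Entry into force is 20 days after publication; each milestone counts from there."""
    entry_into_force = publication + timedelta(days=20)
    timeline = {"Entry into force": entry_into_force}
    for label, months in MILESTONES.items():
        timeline[label] = add_months(entry_into_force, months)
    return timeline


# Hypothetical publication date, for illustration only.
for label, when in application_timeline(date(2024, 6, 1)).items():
    print(f"{label}: {when.isoformat()}")
```

With that hypothetical 1 June 2024 publication, entry into force falls on 21 June 2024 and the bans apply six months later, on 21 December 2024.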

The AI Act “aims to protect fundamental rights, democracy, the rule of law and environmental sustainability from high-risk AI, while boosting innovation and establishing Europe as a leader in the field”. It sets out a standardized framework for how AI systems are provided and used within the European Union. The AI Act applies to public and private actors alike, in respect of AI systems that are placed on the market or used within the Union. As such, the regulation is aimed at providers of all types of AI systems, as well as at deployers of high-risk AI systems.

The AI Act proposal attracted tremendous attention from the public and from lobbyists, receiving the highest number of feedback submissions of any European tech bill. The main debates revolved around the classification of certain AI systems and the possible chilling effect of the regulation on innovation.

Classification of AI systems’ risks

Applying the AI Act first requires determining which risk category or categories the AI system concerned falls into. Risks are broken down as follows, according either to the purpose of the AI system or to its effects.

Unacceptable risk – violation of EU fundamental rights and values

The following practices are deemed to pose unacceptable risks: certain biometric categorization systems, emotion recognition in the workplace and in schools, social scoring, exploitation of the vulnerabilities of persons, use of subliminal techniques, real-time remote biometric identification in publicly accessible spaces by law enforcement, individual predictive policing, and certain forms of untargeted scraping (of facial images to build facial recognition databases).

High risk – impact on health, safety, or fundamental rights

Annexed to the Act is a list of use cases which are considered to be high-risk.

E.g., profiling of natural persons, critical infrastructure, education and vocational training, employment, essential private and public services, certain law enforcement systems, systems subject to third-party assessment under sectoral regulation, etc.

Transparency risk – risks of impersonation, manipulation, or deception

AI systems intended to interact directly with natural persons, as well as general-purpose AI models, may give rise to transparency risks. E.g., chatbots, deepfakes, AI-generated content.

Systemic risk of general-purpose AI models – powerful models could cause serious accidents or be misused for far-reaching cyberattacks

General-purpose AI models may give rise to systemic risks; they are presumed to do so when the cumulative amount of computation used for their training exceeds 10^25 floating-point operations (FLOPs)[1]. E.g., OpenAI's GPT-4 and likely Google DeepMind's Gemini.
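As a rough illustration of what the 10^25 FLOPs presumption means in practice, the Python sketch below compares an estimate of training compute against the threshold. It assumes the common engineering rule of thumb that training a dense transformer costs about 6 FLOPs per parameter per training token; that heuristic is not part of the AI Act, and the model sizes used are hypothetical.

```python
# Presumption threshold named in the text: cumulative training compute above 10^25 FLOPs.
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def estimate_training_flops(n_parameters: float, n_training_tokens: float) -> float:
    """Rough dense-transformer estimate: about 6 FLOPs per parameter per training token.

    This 6 * N * D rule of thumb is an engineering heuristic, not a method set out in the AI Act.
    """
    return 6.0 * n_parameters * n_training_tokens


def presumed_systemic_risk(training_flops: float) -> bool:
    """True if training compute strictly exceeds the 10^25 FLOPs presumption threshold."""
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


# Hypothetical models: parameter and token counts are illustrative only.
smaller = estimate_training_flops(70e9, 15e12)
larger = estimate_training_flops(400e9, 15e12)
print(f"{smaller:.1e}", presumed_systemic_risk(smaller))  # 6.3e+24 False
print(f"{larger:.1e}", presumed_systemic_risk(larger))    # 3.6e+25 True
```

The threshold only creates a presumption: the Commission may also designate models as posing systemic risk on the basis of other criteria, so an estimate below 10^25 FLOPs is not conclusive.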

Minimal risk – common AI systems

Most AI systems currently used, or likely to be used, in the EU. E.g., spam filters, recommender systems, etc.

Risk-based regimes of AI systems

Each risk category carries its own regime. The second step is to determine what the risk classification entails for the AI system: a prohibition, obligations to implement tailored requirements, or voluntary measures.

Unacceptable risk – prohibition

AI applications that threaten citizens’ rights will be prohibited. Since such systems are banned outright, there is no need to assess whether any other regime applies.

High risk – conformity assessment, post-market monitoring, etc.

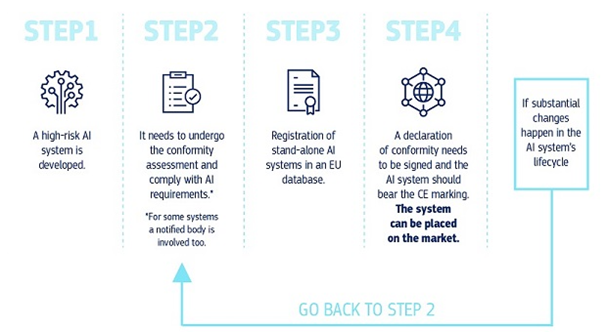

To ensure full compliance with the AI Act, providers of high-risk AI systems must follow two steps.

- Before placing the system on the EU market or otherwise putting it into service, providers must subject it to a conformity assessment.

- Providers must implement quality and risk management systems to ensure their compliance with the new requirements and to minimise risks for users and affected persons, even after a product is placed on the market.

A deployer will then have to proceed with two further steps:

- Deployers that are public authorities, or entities acting on their behalf, will have to register the AI systems in a public EU database, unless those systems are used for law enforcement or migration, in which case they are registered in a non-public section of the database.

- Deployers must assess and reduce risks, maintain use logs, be transparent and accurate, and ensure human oversight. Citizens will have a right to submit complaints about AI systems and receive explanations about decisions based on high-risk AI systems that affect their rights.

Source: European Commission

Transparency risk – transparency requirements

General-purpose AI (GPAI) systems, and the GPAI models they are based on, must meet certain transparency requirements, including compliance with EU copyright law and publishing detailed summaries of the content used for training. The more powerful GPAI models that could pose systemic risks will face additional requirements, including performing model evaluations, assessing and mitigating systemic risks, and reporting on incidents.

Additionally, artificial or manipulated images, audio or video content (“deepfakes”) need to be clearly labelled as such.

Systemic risk – model evaluation, risk mitigation, incident reporting, cybersecurity

Providers of general-purpose AI models with systemic risk shall:

- Perform model evaluation with a view to identifying and mitigating systemic risk.

- Assess and mitigate possible systemic risks at Union level.

- Keep track of, document and report to the AI Office relevant information about serious incidents and possible corrective measures to address them.

- Ensure an adequate level of cybersecurity protection.

Minimal risk – no additional obligations, voluntary codes of conduct
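The two-step logic described in this section (identify the risk category or categories, then apply the corresponding regime) can be summarised in a deliberately simplified Python sketch. The category labels and one-line regime summaries are hypothetical shorthand for the headings above, not legal text, and real classification remains a legal assessment.

```python
from enum import Enum


class RiskCategory(Enum):
    # Hypothetical labels mirroring the risk categories described above.
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    TRANSPARENCY = "transparency"
    SYSTEMIC_GPAI = "systemic (general-purpose AI models)"
    MINIMAL = "minimal"


# Simplified summaries of each regime (illustrative shorthand, not the wording of the Act).
REGIMES = {
    RiskCategory.HIGH: "conformity assessment, risk and quality management, post-market monitoring",
    RiskCategory.TRANSPARENCY: "transparency requirements (e.g. labelling deepfakes)",
    RiskCategory.SYSTEMIC_GPAI: "model evaluation, systemic-risk mitigation, incident reporting, cybersecurity",
    RiskCategory.MINIMAL: "no additional obligations; voluntary codes of conduct",
}


def applicable_regimes(categories: set[RiskCategory]) -> list[str]:
    """Map the categories found in step one to the regimes that follow in step two."""
    if RiskCategory.UNACCEPTABLE in categories:
        # A prohibited practice is banned outright; other regimes need not be assessed.
        return ["prohibited"]
    # Categories can be cumulative; iterate in enum order for a stable result.
    regimes = [
        REGIMES[c]
        for c in RiskCategory
        if c in categories and c in REGIMES and c is not RiskCategory.MINIMAL
    ]
    # If no other category applies, the system falls into the residual minimal-risk regime.
    return regimes or [REGIMES[RiskCategory.MINIMAL]]


print(applicable_regimes({RiskCategory.HIGH, RiskCategory.TRANSPARENCY}))
```

Returning early on an unacceptable-risk finding mirrors the point made above that, once a practice is prohibited, no other regime needs to be assessed.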

Exemptions

The following are exempt from all obligations: (i) research, development and prototyping activities preceding release on the market, and (ii) AI systems used exclusively for military, defense or national security purposes, regardless of the type of entity carrying out those activities.

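Providers of free and open-source models are also largely exempt from these obligations (they must still, for instance, comply with EU copyright law and publish a summary of their training content), unless their models pose systemic risks.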

The use of remote biometric identification (RBI) systems by law enforcement is prohibited in principle, except in exhaustively listed and narrowly defined situations. “Real-time” RBI can only be deployed if strict safeguards are met, e.g., its use is limited in time and geographic scope and subject to specific prior judicial or administrative authorisation. Such uses may include, for example, a targeted search for a missing person or preventing a terrorist attack.

Measures to support innovation and SMEs

Regulatory sandboxes and real-world testing will have to be established at national level and made accessible to SMEs and start-ups, so that innovative AI can be developed and trained before being placed on the market. As regards developments in the Benelux, the National Commission for Data Protection in Luxembourg (Commission nationale pour la protection des données) has launched a regulatory sandbox, which will be open for applications as of 14 June 2024. In addition, the Dutch Data Protection Authority (Autoriteit Persoonsgegevens) has indicated that it intends to launch a Dutch regulatory sandbox by mid-2026 to advise providers of AI systems on complex questions about the rules and obligations under the AI Act.

Procedures and fines

Competent authority: Each Member State will designate at least one national authority which will supervise the application of the AI Act and represent the country in the European Artificial Intelligence Board (the Board). At European level, a new European AI Office will be established within the European Commission to supervise general-purpose AI models and cooperate with the Board.

Market surveillance authorities will support post-market monitoring of high-risk AI systems through audits and by offering providers the possibility to report on serious incidents or breaches of fundamental rights obligations of which they have become aware. Any market surveillance authority may, for exceptional reasons, authorise the placing on the market of specific high-risk AI systems.

Fines: Breaches of the AI Act will be fined by the designated competent authority, taking into account the thresholds set out by the regulation, which will be further specified by European Commission guidelines:

- Up to €35m or 7% of the total worldwide annual turnover of the preceding financial year (whichever is higher) for infringements on prohibited practices or non-compliance related to requirements on data.

- Up to €15m or 3% of the total worldwide annual turnover of the preceding financial year for non-compliance with any of the other requirements or obligations of the Regulation, including infringement of the rules on general-purpose AI models.

- Up to €7.5m or 1.5% of the total worldwide annual turnover of the preceding financial year for the supply of incorrect, incomplete or misleading information to notified bodies and national competent authorities in reply to a request.

- For each category of infringement, the threshold is the lower of the two amounts for SMEs and the higher for other companies.
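The way each cap combines a fixed amount with a share of worldwide turnover can be expressed in a few lines of Python. This is a sketch of the maximum possible fine only, under the tiers listed above; the tier keys and turnover figures are hypothetical, and the fine actually imposed is set by the competent authority within these caps.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTier:
    fixed_cap_eur: float
    # Fraction of total worldwide annual turnover of the preceding financial year.
    turnover_share: float


# Tiers as listed above; the keys are illustrative labels, not terms used in the Act.
TIERS = {
    "prohibited_practices": FineTier(35_000_000, 0.07),
    "other_obligations": FineTier(15_000_000, 0.03),
    "incorrect_information": FineTier(7_500_000, 0.015),
}


def maximum_fine(tier: str, worldwide_turnover_eur: float, is_sme: bool) -> float:
    """Return the fine ceiling: the lower of the two amounts for SMEs, the higher otherwise."""
    t = TIERS[tier]
    turnover_based = t.turnover_share * worldwide_turnover_eur
    if is_sme:
        return min(t.fixed_cap_eur, turnover_based)
    return max(t.fixed_cap_eur, turnover_based)


# Hypothetical companies: a large firm with EUR 2bn turnover and an SME with EUR 20m turnover.
print(f"€{maximum_fine('prohibited_practices', 2_000_000_000, is_sme=False):,.0f}")  # €140,000,000
print(f"€{maximum_fine('prohibited_practices', 20_000_000, is_sme=True):,.0f}")      # €1,400,000
```

For the large company, 7% of turnover exceeds the €35m fixed amount, so the turnover-based figure sets the ceiling; for the SME, the lower of the two amounts applies.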

Users may also lodge a complaint with a national authority about a breach of the AI Act by an AI system provider or deployer.

The authors would like to thank Cécile Manenti for her invaluable contribution to this blogpost.